![[Home]](http://www.bayleshanks.com/cartoonbayle.png)

Table of Contents for Programming Languages: a survey

note: type constructs are not in any chapter here, but instead are covered in Part ?: Type Systems

most OOP stuff is duplicated elsewhere, but..

http://journal.stuffwithstuff.com/2013/08/26/what-is-open-recursion/

-- Bob Nystrom

Classes which can't be instantiated, but exist to be subclasses.

Some languages allow code in base classes to refer to members which aren't defined in the base class, causing hidden requirements on the subclass to define those members (or override the base class code). e.g. http://docs.scala-lang.org/tutorials/tour/abstract-types.html

Some languages without interfaces use abstract base classes as a standin for interfaces, e.g. consumers of the class refer to the abstract base class, and then any descendent of the abstract base class will typecheck against these consumers. This is most helpful with multiple inheritance, as one object may need to implement multiple interfaces.

avoid one sort of future-proofing

some languages, e.g. C++ and scala, have special syntax for declaring constructors which take parameters and simply store them with the object. In e.g. C++, the constructor value are stored in ordinary fields. In e.g. Scala, the constructor's formal parameters are simply made 'visible in the whole body of the class' -- http://docs.scala-lang.org/tutorials/tour/classes.html (to is this really different from fields? are these parameters immutable values, rather than variables?)

some languages create an implicit constructor function with the same name as the class, e.g. to call the constructor for class Point with two arguments 3 and 4 and assign the resulting object to x, you say "x = Point(3,4)". In other languages, you use a special operator 'new': "x = new Point(3,4)". In some languages (e.g. C++), both of these forms are available, but they do slightly different things (see 'resource management')

in Clojure, 'reify' creates an anonymous instantiation of an interface: http://clojuredocs.org/clojure_core/clojure.core/reify

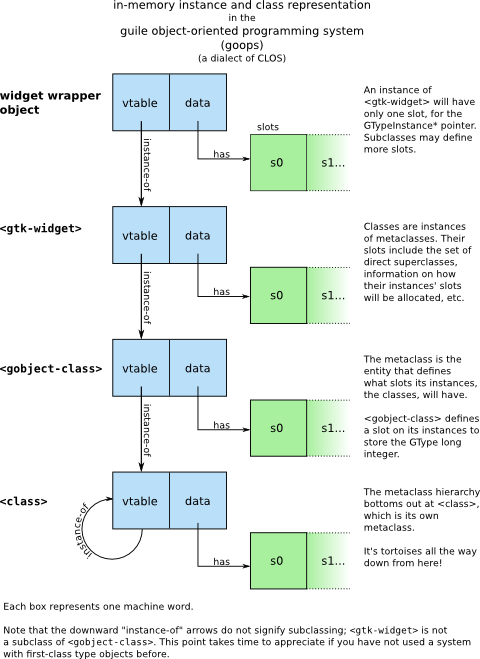

classes as first-class objects

(from http://wingolog.org/archives/2008/04/11/allocate-memory-part-of-n , http://wingolog.org/archives/2009/11/09/class-redefinition-in-guile )

todo should we merge this with extensible code? with oop?

extensibility defined (in contrast to metaprogramming) as removing the need to future-proof

future-proofing defined by Bob Nystrom: adding boilerplate in case, in the future, you need to replace built-in functionality with a user-defined abstraction without having to change each callsite.

http://journal.stuffwithstuff.com/2010/09/18/futureproofing-uniform-access-and-masquerades/ http://journal.stuffwithstuff.com/2010/10/21/the-language-i-wish-go-was/

removing the need to future proof:

"Can I replace built-in functionality with a user-defined abstraction without having to change each callsite?"

remove the need for getters and setters

uniform access principal

(e.g. via subtyping)

See http://stackoverflow.com/questions/2505067/class-self-idiom-in-ruby (this discusses both the 'class <<' operator in general, and the interesting special case of 'class << self')

library if first-class functions

also called vectorized

esp. useful in numeric code dealing with vectors or matrices

in some languages, you might be able to overload an operator for a new value type only at the location of the the declaration of that value type. In others, the operator can be declared in one place, the value type in another, and the overload of that operator for that value in yet another (eg Haskell, where we have typeclasses and typeclass implementations which can be declared in separate places) [1]

e.g. Python

http://www.rafekettler.com/magicmethods.html

"pervasive protocols for built-in types"

example of print statements on numbers; autoconvert to strings

example from http://scalada.blogspot.com/2008/03/implicit-conversions-magical-and.html e.g. add .reverse() operation to String class by creating RichString? class and adding it there, then giving an implicit conversion

potential issue with implicit conversions and equality (example from http://scalada.blogspot.com/2008/03/implicit-conversions-magical-and.html e.g. string1.reverse().reverse() != string1 if .reverse implicitly converts from String to RichString? )

can slow down compilation

e.g. http://stackoverflow.com/questions/3606591/why-does-intellij-idea-compile-scala-so-slowly/3612212#3612212 e.g. "Most likely the influence of the number of implicits in scope is in some way multiplied by the actual number of implicit applications, because at each implicit application the compiler must ensure one and only one implicit “heals” the candidate compiler error. In other words, the compiler can't stop looking at implicits once it finds one that solves the type error at hand; it must keep on looking to make sure one or more other implicits don't also solve that same type error. Thus the compile time cost of implicit use is likely related to the number of implicits in scope multiplied by the number of times the compiler must check all those implicits (i.e., the number of implicit applications). I say “likely,” because as yet we haven't written a script to verify this theory. " -- http://www.artima.com/articles/compile_time.html

because of the issues with implicit conversions, many languages do not support them

scala: limit direct implicit conversions, but can chain via implicit parameters

example from http://stackoverflow.com/questions/5332801/how-can-i-chain-implicits-in-scala/15334997 possibly see http://www.scala-lang.org/old/node/114 for background? dont think its needed tho

todo: read http://stackoverflow.com/questions/5598085/where-does-scala-look-for-implicits and see if its useful

"modularity is all about types" -- http://existentialtype.wordpress.com/2011/04/16/modules-matter-most/

"Modules are about name control and only secondarily, if at all, about types." -- http://existentialtype.wordpress.com/2011/04/16/modules-matter-most/#comment-733

---

forms of import

ML?

public/private/protected vs consenting adults protocols

inheritance

delegation

interface embedding (Go: http://golang.org/doc/effective_go.html#embedding )

Subclassing is an OOP idea where instances of the subclass inherit, by default, all of the fields and methods of the superclass, and then can selectively override some of these methods. The overriding methods have access to the internal fields and methods of the superclass, just as if they were written in the superclass (in some languages e.g. Java this can be restricted by using 'private', as opposed to 'protected', access modifiers). In other words, the overriding methods in the subclass are 'behind' the encapsulation boundary, just like the internal members of the superclass, and as opposed to the clients of the class. In typed languages, any instance of the subclass is considered to be of the type of the subclass, but also to be of the type of the superclass. A key property of subclasses in most OOP languages is the Liskov substitution principle, which states that any instance of a superclass should be able to be replaced by a subclass. This is used to add functionality beyond what is offered by the superclass, or to implement the superclass in a different way.

Composition is a related but different way to achieve the same goals. In composition, instead of having a subclass, you create a class which has a member which contains an object of what would have been the superclass. Then for the psuedo-subclass you write methods wrapping all of the public methods of the superclass. The idea is that you should be able to substitute any instance of the psuedo-subclass for any instance of the psuedo-superclass, e.g. the Liskov Substitution Principal should apply. Now instead of overriding methods in the subclass, you modify the wrapper methods in the psuedo-subclass.

The main advantage of composition is that it is more decoupled. This is because psuedo-subclass is outside the encapsulation boundary of the psuedo-superclass, and does not have access to the psuedo-superclass's non-public fields or methods. When the programmer of a superclass changes its non-public fields or methods, this might break subclasses; but it will not break psuedo-subclasses which only interact with the psuedo-superclass via composition.

Some advantages of subclassing are (a) you don't have to bother to wrap the methods of the (psuedo-)superclass, (b) in most OOP languages, the type system recognizes instances of a subclass as also being of the type of the superclass, but does not recognize objects which extend the superclass by composition as being of its type, and (c) you can access the non-public fields and methods of the superclass. To elaborate on (c), say that you have a class A, and you need to make a class B which is almost exactly the same as A except for one small change. You can make this change by subclassing A and overriding one method which is called from various other methods of class A. Unfortunately, the programmer of A didn't forsee that you might want to change just the part that you do, and so there is no way provided for composing class to just change that part without also reimplementating a large part of A's non-public fields and methods. Using a subclass, you can override the method at issue, and when other methods in the superclass call that method, they'll get your implementation. With composition, you would have to reimplement a lot of code.

(c) may be a little bit confusing because this very same thing is the opposite of what is claimed to be an advantage for composition. The reason is that accessing internal fields and methods of a superclass has both advantages and disadvantages; an advantage is that you can change the behavior of the superclass in a fine-grained way, a disadvantage is that you become tightly coupled to the implementation of the superclass.

"A subject, such as a computer program, is said to be using ambient authority, if it only needs to specify the names of the involved object(s) and the operation to be performed on them in order for a permitted action to succeed.

...

For example, suppose a C program opens a file for read access by executing the call:

open("filename", O_RDONLY, 0)The desired file is designated by its name on the filesystem, which does not by itself include authorising information, so the program is exercising ambient authority.

...

...if the program should be able to access an object when acting on its own behalf but not when acting on behalf of one of its clients (or, on behalf of one client but not another), it has no way to express that intention. This inevitably leads to such programs being subject to the Confused deputy problem.

The term "ambient authority" is used primarily to contrast with capability-based security, in which executing programs receive permissions as they might receive data, as communicated first-class object references. This allows them to determine where the permissions came from, and thus avoid the Confused deputy problem. However, since there are additional requirements for a system to be considered a capability system besides avoiding ambient authority, "non-ambient authority system" is not just a synonym for "capability system". " -- http://en.wikipedia.org/wiki/Ambient_authority

todo: what are the "additional requirements for a system to be considered a capability system besides avoiding ambient authority" mentioned above?

ok i think it's this:

so, for example, in Unix a process with write access to some file might be able to create another process with write access to that file. But it can't give that access permission to a third-party process that it didn't create; and it can't save its capability of accessing the given file to disk for later use.

(todo i think i read something about this somewhere besides wikipedia eg in a blog, which was easier to understand than the wikipedia article? find and link to that)

Links:

Scan and modify untrusted code before executing it to ensure it doesn't do anything you don't want it to.

Links:

Pointers

References

Aliases

variables vs. (immutable) values

and how let can make you break your functions into parts

e.g. scala val

C.O.W.

e.g. Apple Swift 'let'

note: in Apple Swift, "The value of a constant doesn’t need to be known at compile time, but you must assign it a value exactly once." -- https://developer.apple.com/library/prerelease/ios/documentation/Swift/Conceptual/Swift_Programming_Language/GuidedTour.html#//apple_ref/doc/uid/TP40014097-CH2

e.g. scala var

ref and deref

sometimes error if cannot be evaluated at compile time

http://en.cppreference.com/w/cpp/language/raii

why can't Python use RAII for e.g. definitely closing a file handle when it goes out of scope? Because Python's garbage collection is non-deterministic; it is not guarantees that objects will be destroyed (and their destructors called) as soon as the objects go out of scope.

" Closures Because Common Lisp is lexically scoped, when we define a function containing free variables, the system must save copies of the bindings of those variables at the time the function was defined. Such a combination of a function and a set of variable bindings is called a closure. " -- http://ep.yimg.com/ty/cdn/paulgraham/onlisp.pdf

Closures can be used to provide private member variables to objects; see http://javascript.crockford.com/private.html

" upvote

kyllo 34 days ago

| link |

Here's an SML example:

val a = 1 : int

val f = fn : unit -> int

val it = 1 : int

val a = 2 : int

val it = 1 : int a is still bound to 1 as far as f() is concerned

Now in Javascript:

> var a = 1;

undefined

> function f(){return a};

undefined

> f();

1

> a = 2;

2

> f();

2

>

If you bind a val, then you define a function that references that val, then you later shadow the val binding, then call the function again, the function still sees the earlier val binding, because it's a closure of the environment at the point where the function was defined, not at the point where it was called. This is unlike variable assignment in imperative languages. " -- https://news.ycombinator.com/item?id=6278047

http://www.vpri.org/pdf/tr2008003_experimenting.pdf

in expr block: execute block in/upon the world that results from evaluating expr

sprout (copy-on-write from sprout parent world like in languages with prototype inheritance)

commit (write changes from a sprout back to the parent)

watch out for non-uniform auto-initialization policies, which are hard to remember: e.g. in C, static vars (but not other vars) are initialized to 0 by default.

if local vars are kept on a stack, could use separate stacks for the call stack and for variables

if a data stack, could use display registers (many registers, each pointing to one ancestor stack frame) to aid quick lookup of local variables in ancestor scopes

easier to debug stuff than with dynamic scope. Older Lisps used to have dynamic scope, but Scheme and Common Lisp have lexical scope.

note: lexical scope enables closures

http://stackoverflow.com/questions/1753186/dynamic-scoping-deep-binding-vs-shallow-binding http://stackoverflow.com/questions/3869040/dynamic-static-scope-with-deep-shallow-binding-exercises

but http://www.delorie.com/gnu/docs/elisp-manual-21/elisp_150.html

http://stackoverflow.com/questions/1753186/dynamic-scoping-deep-binding-vs-shallow-binding http://stackoverflow.com/questions/3869040/dynamic-static-scope-with-deep-shallow-binding-exercises

but http://www.delorie.com/gnu/docs/elisp-manual-21/elisp_150.html

hoisting of declarations

actually i don't know much about constructs for real-time systems

todo: i don't know all of the languages i list here, must look thru them and make sure they're correctly categorized

todo: lookup which of these are Turing-universal and which aren't and list that here

explain difference between a data model and a data representation language

encapsulation / scope modifiers: prevent you're getting blamed for breaking the build when other ppl depend on code you told them not to depend on (vs; flexibility for others to reuse code without copying; flexibility for binary / type compatibility for existing code)

typing can help other team members when reading each other's code (although it can also make code less concise, if type inference is not used)

typing also helps by having the compiler enforce some architectural constraints, rather than trying to enforce them by social convention

readability becomes more important

languages with too many ways to do things may be problematic; otoh some people say the thing to do is to have the best programmers metaprogram a framework that everyone on the team then uses, and then it's fine

combining string interpolation, formatting, and I/O

e.g. STDOUT in Python, cout in C++

and also resource collection, e.g. finalizers, Python with, C# using

the issue of ordering destrucutors in reference cycles (Python just doesn't call them: http://eli.thegreenplace.net/2009/06/12/safely-using-destructors-in-python/ )

the issue of exceptions thrown within a destructor

C++ allows you to determine if objects are on the stack or on the heap (heap iff created via 'new'). stack-allocated objects are released upon leaving scope (e.g. their destructors are guaranteed to be called then; contrast with Python finalizers which are no guaranteed to be called until garbage collected, which is nondeterministic, e.g. it's like they are always on the heap). C++ also provides 'delete' to delete things created by 'new'

note: python with allows you to implement a context manager using generators and a @contextmanager decorator: http://www.python.org/dev/peps/pep-0343/

RAII

C++'s copy constructors, copy assignment, move constructors, move assignment. Assignments must sometimes consider deallocating the items that were previously in the place that you are moving the new things into.

C++'s new[] and delete[] (recursively calls new and delete for every item in an array)

in C++ construction without 'new' allocated the object on the stack (so that it automatically goes out of scope and is deallocated when the program exits and ascends above the stack frame from which the object was created), and construction with 'new' allocated objects on the heap, from which the programmer must manually use 'delete' to deallocate them.

ffi

calling conventions

data interop

low-level control over memory layout

embeddability

https://en.wikipedia.org/wiki/Domain-specific_language

In most languages, there is information provided in the object code to allow the runtime to tell from where in the source code each part of the object code comes from -- this is invaluable in printing informative runtime error messages

Exposing this feature in the language allows the compilation pipeline to be externally extended without compromising the ability for the runtime to map the object code back to the original source.

For example, JavaScript source maps.

In languages where the code to be run can be dynamically modified at runtime, it may be helpful to provide support for storing the original form of the code before each transformation, for example, in stack frames (e.g. this feature being considered for Wat).

dunno where to put this, but:

there's a similarity between macro application, logic-based production rule firing, and PEG grammar application, in that in these cases you have a control structure that looks over the thing to be matched (a state), applies a set of matching rules to it in order to find a potential match, and then mutates the state according to what the rule tells you to do, which may be a function of the part of state which matched

--

todo: the first few pages in http://users.rcn.com/david-moon/PLOT/ had some good concepts

---

todo: each page in https://tour.golang.org/ tends to introduce a construct

todo: each page in the tour of scala tends to introduce a construct

---

todo: sort this into more chapters

Chapter 24: Syntax Chapter ?: Control Chapter ?: Error handling Chapter ?: Type Chapter ?: Numbers Chapter ?: Strings Chapter ?: Metaprogramming * contains: hacking the call stack, hacking the ENV, operations, hacking classes, syntax, macros etc, eval (todo generalize), misc Chapter ?: Low level Chapter ?: Alternative paradigms motivated by physics Chapter ?: Misc constructs Chapter ?: Other features