i guess besides having stuff like PSET for good "zero to pixel" for beginners, we should also aim to provide facilities for 'demoscene' type stuff. I dunno what platforms they have but i assume we need to allow some sort of basic access to modern video and sound:

what APIs are suitable here? Remember, we don't want to include entire modern APIs, just a tiny subset.

for example, this fits in 4k on a modern Windows PC (detail on requirements: [1] ):

https://www.youtube.com/watch?v=9r8pxIogxZ0

I can't find source code for the video aspects of that demo.

As for sound:

On the page [2], apparently the author says: "4klang "sources": http://noby.untergrund.net/4klang-zettai.zip (4klang 3.11, Renoise 2.8.1, provided as is, ugly and messy just like it was made)"

which contains two files, 'pts-311f.4kp' and 'pts-f.xrns'.

The xrns file is apparently the source for the audio using Renoise, a $75 sound program [3]. But 4klang is another sound program [4]. Apparently these can be used together; another project says:

" The audio is currently being generated by 4klang synth. Music is written in Renoise DAW and exported through the 4klang VSTi plugin, resulting in a 4klang.h file and a 4klang.inc file, which hold useful defines and song data.

The 4klang.inc file should be compiled along with 4klang.asm, which holds the synth impl, together into 4klang.obj, using NASM assembler. The obj file can then be linked to the demo executable. If you want to write your own 4klang song, then you should generate new 4klang.inc and 4klang.h files and assemble the 4klang.inc/4klang.asm into a new 4klang.obj.

Then place 4klang.h and 4klang.obj into the code directory and run build.bat. " [5].

So the question for audio is, what I/O API primitives do 4klang executables access?

Also, presumably there are 'demoscene'-oriented languages and VMs (although maybe not? given the emphasis on small file sizes, mb ppl just usually write in assembly? But the 2017 revision party PC category link above allows DotNet, although strangely not Java).

Of course, real demoscene stuff uses file compression like [6]. But we aren't trying to support that (at least, not directly), so we don't need to be a good language to write a file compressor in.

so remaining stuff to do here:

---

hmm an isa for randomly generating interesting programs should be both:

what we DON'T need is:

so, eg we don't need many registers, we don't need position-independent code

the word size is nibbles (4 bits), represented using two's complement. Arithmetic is signed. Von Neumann architecture (self-modifying code is possible).

so here's some ideas:

The 8 three-operand instruction opcodes and mnemonics and operand signatures are:

The 8 instr-two-0 two-operand instruction opcodes and mnemonics and operand signatures are:

The 8 instr-two-1 two-operand instruction opcodes and mnemonics and operand signatures are:

The 8 instr-one-0 one-operand instruction opcodes and mnemonics and operand signatures are:

The 8 instr-one-1 one-operand instruction opcodes and mnemonics and operand signatures are:

The 8 zero-operand instruction opcodes and mnemonics and operand signatures are:

as you can see it gets a little hairy because we want to have lots of different instructions. i'm going to leave this for now, but i think the basic idea is pretty good.

---

you can unify a bunch of those variant instructions by having 4 4-bit fields (16-bit instructions). It's probably worth it to give our poor mutating algorithms more regularity. So we have:

the word size is nibbles (4 bits), represented using two's complement. Arithmetic is signed. Von Neumann architecture (self-modifying code is possible). There is a stack and 3 registers; TOS can also be used as a register, and the second item on the stack can be accessed in register direct mode only.

Note that dynamic jumps can be accomplished by directly changing the PC.
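
a minimal sketch of what "4 4-bit fields" could look like in practice. The field order (opcode first, then three operands) and the names `Instr`, `decode`, `encode` and `to_signed` are assumptions for illustration; the notes above don't fix a layout.

```python
from typing import NamedTuple


class Instr(NamedTuple):
    # Hypothetical layout: the notes only say "4 4-bit fields".
    opcode: int
    a: int
    b: int
    c: int


def decode(word: int) -> Instr:
    """Split a 16-bit instruction word into four 4-bit fields."""
    if not 0 <= word <= 0xFFFF:
        raise ValueError("instruction must fit in 16 bits")
    return Instr((word >> 12) & 0xF, (word >> 8) & 0xF,
                 (word >> 4) & 0xF, word & 0xF)


def encode(instr: Instr) -> int:
    """Pack four 4-bit fields back into one 16-bit word."""
    word = 0
    for value in instr:
        if not 0 <= value <= 0xF:
            raise ValueError("each field must fit in 4 bits")
        word = (word << 4) | value
    return word


def to_signed(nibble: int) -> int:
    """Interpret a 4-bit value as two's complement (range -8..7)."""
    return nibble - 16 if nibble & 0x8 else nibble
```

since every bit pattern decodes to some (opcode, a, b, c) tuple, a random mutation never produces an undecodable instruction, which is the kind of regularity the note is after.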

The 16 three-operand instruction opcodes and mnemonics and operand signatures are:

The 16 two-operand instruction opcodes and mnemonics and operand signatures are:

The 16 one-operand instruction opcodes and mnemonics and operand signatures are:

The 16 zero-operand instruction opcodes and mnemonics and operand signatures are:

maybe should add more stackops...

---

wait a minute, if we are thinking about an ISA to be useful as a context for aid in compressing/decompressing data for, say, a 4k demoscene, then we can't have too many instructions because the size of the VM code in the decompressing algorithm will outweigh the compression savings. Otoh it's very important to have regularity in that case. hmm... mb get rid of the one-operand and zero-operand instructions and stuff them into the two-operand ones; that's a little more regular anyways, in the sense that it makes those instructions 'easier to find' with mutation.

so how about the following 32 instructions:

The 16 three-operand instruction opcodes and mnemonics and operand signatures are:

The 16 two-operand instruction opcodes and mnemonics and operand signatures are:

(one-operand, but still in this list)

(zero-operand, but still in this list)

also, i'm afraid that the addressing modes given above were probably too irregular for compact implementation (having addressing modes at all is bad enough). Not sure what to do about that.

also, if the goal was actually use in 4k demoscenes, we'd probably want byte words, not nibble words.

also, it may be useful to use the OVM's first-class-functions stuff, but i'm not sure if that would be worth it, either. hmm...

also, this exercise gives me some ideas that should maybe be applied to OVM:

---

hmm should look at genetic algorithms too

hmm i guess in genetic algorithms, you wouldn't want program locations to be genes; because if the two parents are doing almost the same thing at the beginning and end of the program, but one parent has a longer section in the middle, then the program locations of the stuff at the end of that parent are displaced from their corresponding program locations in the other parent; so first you want to make a mapping that maps the closely corresponding instructions in each parent.
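
a rough sketch of the "map corresponding instructions first" idea, using Python's `difflib.SequenceMatcher` as a stand-in alignment method (my substitution, not something these notes prescribe). Programs are assumed to be lists of hashable instructions; `aligned_crossover` is a made-up name.

```python
import random
from difflib import SequenceMatcher


def aligned_crossover(parent_a, parent_b, rng):
    """One-point crossover cut at a position where both parents agree.

    The cut point is chosen inside a block of instructions that the two
    parents share, so the child takes the head of parent_a and the tail
    of parent_b even when the parents differ in length.
    """
    matcher = SequenceMatcher(a=parent_a, b=parent_b, autojunk=False)
    # get_matching_blocks() ends with a zero-length sentinel; drop it.
    blocks = [m for m in matcher.get_matching_blocks() if m.size > 0]
    if not blocks:
        return list(parent_a)  # nothing aligns; fall back to a copy
    block = rng.choice(blocks)
    offset = rng.randrange(block.size + 1)
    cut_a = block.a + offset
    cut_b = block.b + offset
    return list(parent_a[:cut_a]) + list(parent_b[cut_b:])
```

for example, with parents `LOAD ADD JMP SUB STORE` and `LOAD MUL MUL JMP XOR STORE`, a cut at the shared `JMP` can give `LOAD ADD JMP XOR STORE`, whereas cutting both parents at the same raw index would not line the two `JMP`s up.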

also you'd want mutation operations not only of changing the bits of some instruction, but also inserting or deleting instructions (one or an entire segment of many instructions at once)
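
a minimal sketch of those three mutation kinds, assuming a program is a list of 16-bit instruction words (as in the 16-bit encoding discussed earlier). The name `mutate` and the `max_segment` parameter are hypothetical.

```python
import random


def mutate(program, rng, max_segment=4):
    """Return a mutated copy of program (a list of 16-bit ints).

    One of three mutations is picked at random: flip a single bit of one
    instruction, insert a run of random instructions, or delete a run.
    """
    program = list(program)  # never modify the caller's list
    kind = rng.choice(["flip", "insert", "delete"])
    if kind == "flip" and program:
        i = rng.randrange(len(program))
        program[i] ^= 1 << rng.randrange(16)
    elif kind == "insert":
        i = rng.randrange(len(program) + 1)
        n = rng.randint(1, max_segment)
        program[i:i] = [rng.randrange(1 << 16) for _ in range(n)]
    elif kind == "delete" and program:
        i = rng.randrange(len(program))
        n = rng.randint(1, max_segment)
        del program[i:i + n]
    return program
```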

also maybe callable functions could serve as 'chromosomes'

also when new labels are jumped to or new functions called (maybe the functions should be called by label instead of by address?), they could cause the autocreation of a new empty memory segment (infinitely far away from all others); when code goes to the end of a memory segment that could be an implicit RET (or implicit HALT if it's the main memory segment).
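
a toy sketch of the label-addressed segments idea. The two-instruction set (`CALL`, `EMIT`) and the function name `run` are invented for the example; each segment is its own list, so code in one segment can never run into another.

```python
from collections import defaultdict


def run(segments, entry="main", max_steps=1000):
    """Run a toy program made of ('CALL', label) and ('EMIT', value).

    segments should be a defaultdict(list): calling an unknown label
    autocreates an empty segment. Running off the end of a segment is an
    implicit RET, or an implicit HALT for the entry segment.
    """
    output = []
    stack = [(entry, 0)]  # (segment label, program counter)
    steps = 0
    while stack and steps < max_steps:
        label, pc = stack.pop()
        code = segments[label]
        if pc >= len(code):
            continue  # implicit RET (or HALT when the stack is now empty)
        op, arg = code[pc]
        stack.append((label, pc + 1))  # return address
        if op == "CALL":
            stack.append((arg, 0))
        elif op == "EMIT":
            output.append(arg)
        steps += 1
    return output
```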

---

this article [7] thinks that some languages to NOT learn are:

Dart, Objective-C, Coffeescript, Lua, and Erlang

"due to their lack of community engagement, jobs, and growth"

---

[8] points to a pastebin [9] that contains a code example from an amazing rule-based storywriting system called Inform7:

" Section 1 - Definitions

A wizard is a kind of person. A warrior is a kind of person.

A weapon is a kind of thing. A dagger is a kind of weapon. A sword is a kind of weapon. A staff is a kind of weapon.

Wielding is a thing based rulebook. The wielding rules have outcomes allow it (success), it is too heavy (failure), it is too magical (failure). The wielder is a person that varies.

To consult the rulebook for (C - a person) wielding (W - a weapon): now the wielder is C; follow the wielding rules for W.

Wielding a sword: if the wielder is not a warrior, it is too heavy. Wielding a staff: if the wielder is not a wizard, it is too magical. Wielding a dagger: allow it.

Section 2 - Example

Dungeon is a room. Gandalf is a wizard in Dungeon. Conan is a warrior in Dungeon.

The rusty dagger is a dagger in Dungeon. The elvish sword is a sword in Dungeon. The oaken staff is a staff in Dungeon.

Instead of giving a weapon (called W) to someone (called C): consult the rulebook for C wielding W; if the rule failed: let the outcome text be "[outcome of the rulebook]" in sentence case; say "[C] declines. '[outcome text].'"; otherwise: now C carries W; say "[C] gladly accepts [the W]."

The can't take people's possessions rule is not listed in any rulebook.

Test me with "give sword to gandalf / give sword to conan / give staff to conan / give staff to gandalf / give dagger to gandalf / get dagger / give dagger to conan". "

---

entirely unrelated but interesting:

"RocksDB is used all over Facebook, powers the entire social graph. Great storage engine that pairs well with multiple DBMS: MySQL, Mongo, Cassandra"

---

" Some years ago, I made a preliminary design for a virtual machine called "Extreme-Density Art Machine" (or EDAM for short). The primary purpose of this new platform was to facilitate the creation of extremely small demoscene productions by removing all the related problems and obstacles present in real-world platforms. There is no code/format overhead; even an empty file is a valid EDAM program that produces a visual result. There will be no ambiguities in the platform definition, no aspects of program execution that depend on the physical platform. The instruction lengths will be optimized specifically for visual effects and sound synthesis. I have been seriously thinking about reviving this project, especially now that there have been interesting excursions to the 16-byte possibility space. But I'll tell you more once I have something substantial to show. " [10]

---

"

The traditional competition categories for size-limited demos are 4K and 64K, limiting the size of the stand-alone executable to 4096 and 65536 bytes, respectively. However, as development techniques have gone forward, the 4K size class has adopted many features of the 64K class, or as someone summarized it a couple of years ago, "4K is the new 64K". There are development tools and frameworks specifically designed for 4K demos. Low-level byte-squeezing and specialized algorithmic beauty have given way to high-level frameworks and general-purpose routines. This has moved a lot of "sizecoding" activity into more extreme categories: 256B has become the new 4K. For a fine example of a modern 256-byter, see Puls by Rrrrola.

https://www.youtube.com/watch?v=R35UuntQQF8

...

The next hexadecimal order of magnitude down from 256 bytes is 16 bytes

...

A recent 23-byte Commodore 64 demo, Wallflower by 4mat of Ate Bit, suggests that this might be possible:

https://www.youtube.com/watch?v=7lcQ-HDepqk

The most groundbreaking aspect in this demo is that it is not just a simple effect but appears to have a structure reminiscent of bigger demos. It even has an end. The structure is both musical and visual. The visuals are quite glitchy, but the music has a noticeable rhythm and macrostructure. Technically, this has been achieved by using the two lowest-order bytes of the system timer to calculate values that indicate how to manipulate the sound and video chip registers. The code of the demo follows:

When I looked into the code, I noticed that it is not very optimized. The line "eor $a2", for example, seems completely redundant. This inspired me to attempt a similar trick within the sixteen-byte limitation. I experimented with both C-64 and VIC-20, and here's something I came up with for the VIC-20:

Sixteen bytes, including the two-byte PRG header. The visual side is not that interesting, but the musical output blew my mind when I first started the program in the emulator. Unfortunately, the demo doesn't work that well in real VIC-20s (due to an unemulated aspect of the I/O space). I used a real VIC-20 to come up with good-sounding alternatives, but this one is still the best I've been able to find. Here's an MP3 recording of the emulator output (with some equalization to silent out the the noisy low frequencies).

http://www.pelulamu.net/pwp/vic20/soundflower.mp3

"

" When dealing with very short programs that escape straightforward rational understanding by appearing to outgrow their length, we are dealing with chaotic systems. Programs like this aren't anything new. The HAKMEM repository from the seventies provides several examples of short audiovisual hacks for the PDP-10 mainframe, and many of these are adaptations of earlier PDP-1 hacks, such as Munching Squares, dating back to the early sixties. Fractals, likewise producing a lot of detail from simple formulas, also fall under the label of chaotic systems. "

aka Viznut
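
for reference, Munching Squares (mentioned in the quoted passage above) is usually described as plotting the points (x, x XOR t) for a running counter t. A minimal sketch under that description; the function name and the list-of-lists grid are my own choices, and the original PDP-1 program differs in detail.

```python
def munching_squares_frame(t, size=16):
    """Return a size x size grid with pixel (x, x XOR t) lit for each x.

    size is assumed to be a power of two so that x XOR t stays on screen
    after the modulo.
    """
    grid = [[0] * size for _ in range(size)]
    for x in range(size):
        y = (x ^ t) % size
        grid[y][x] = 1
    return grid
```

stepping t from 0 upward and drawing each frame (or accumulating them) gives the shifting square patterns; the whole effect comes from one XOR per pixel column.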

---

Viznut appeared to publish his EDAM (see above), renamed to IBNIZ, later in 2011:

http://viznut.fi/texts-en/ibniz.html

---

" During this period, the computer market was moving from computer word lengths based on units of 6-bits to units of 8-bits, following the introduction of the 7-bit ASCII standard. " [12]

---

https://www.youtube.com/watch?v=Qw5WLk9IeX0 shows a Sierpinski Triangle demo in 16 bytes.

see https://www.pouet.net/prod.php?which=62079 for some related code
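
one well-known reason a Sierpinski triangle fits in so few bytes is that it falls out of a single bitwise test: pixel (x, y) is set when `x & y == 0`. The sketch below shows just that test; i haven't checked that the linked 16-byte demo uses exactly this formula.

```python
def sierpinski(size=16):
    """Return rows of text where '#' marks pixels with x AND y == 0."""
    rows = []
    for y in range(size):
        rows.append("".join("#" if x & y == 0 else "." for x in range(size)))
    return rows


if __name__ == "__main__":
    print("\n".join(sierpinski(16)))
```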

---

" In order to let the jewels of Core Demoscene Activity shine in their full splendor, there should be a larger scale of equally glorified ways of demonstrating them. Such as interactive art. Or dynamic non-interactive. Maybe games. Virtual toys. Creative toys or games. Creative tools. Or something in the vast gray areas between the previously-mentioned categories. "

"

First phase: Toy Language. It should have an easy learning curve and reward your efforts as soon as possible. It should encourage you to experiment and gradually give you the first hints of a programming mindset. Languages such as BASIC and HTML+PHP have been popular in this phase among actual hobbyists.

Second phase: Assembly Language. While your toy language had a lot of different building blocks, you now have to get along with a limited selection. This immerses you into a "virtual world" where every individual choice you make has a tangible meaning. You may even start counting bytes or clock cycles, especially if you chose a somewhat restricted platform.

Third phase: High Level Language. After working on the lowest level of abstraction, you now have the capacity for understanding the higher ones. The structures you see in C or Java code are abstractions of the kind of structures you built from your "Lego blocks" during the previous phase. You now understand why abstractions are important, and you may also eventually begin to understand the purposes of different higher-level programming techniques and conventions.

Based on this theory, I think it is a horrible mistake to recommend the modern PC platform (with Win32, DirectX/OpenGL, C++ and so on) to an aspiring democoder who doesn't have in-depth prior knowledge about programming. Even though it might be easy to get "outstanding" visual results with a relative ease, the programmer may become frustrated by his or her vague understanding of how and why their programs work.

The new democoders I know, even the youngest ones, have almost invariably tried out assembly programming in a constrained environment at some point of their path, even if they have eventually chosen another niche. 8-bit platforms such as C-64 or NES, usually via emulator, have been popular choices for "first hardcore coding". Sizecoding on MS-DOS has also been quite common.

Not everyone has the mindset for learning an actual "oldschool platform" on their own, however. I therefore think it might be useful to develop an "educational demoscene platform" that is easy to learn, simple in structure, fun to experiment with and "hardcore" enough for promoting a proper attitude. It might even be worthwhile to incorporate the platform in some kind of a game that motivates the player to go thru varying "challenges". Putting the game online and binding it to a social networking site may also motivate some people quite a lot and give the project some additional visibility. "

---

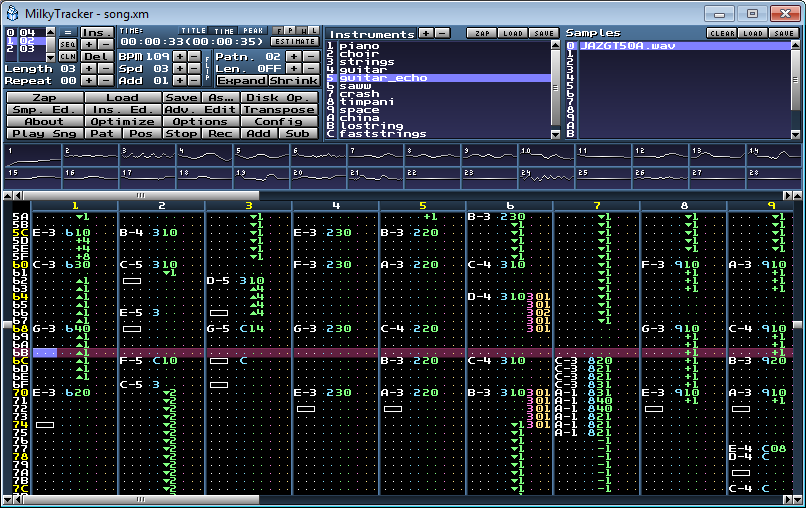

" The tracker format can be divided into 3 main concepts: samples & instruments, patterns and sequencing order. You can typically load or configure the parameters of samples that can be played. Certain trackers allow you to configure samples into instruments by defining behaviors on how the sample will be played when triggered under different pitches. The samples or instruments are then sequenced in patterns that will be played back under the stipulated tempo. And you can set the order of how the patterns will be played under the song sequence. These simple rules are the basis for most trackers that exist out there, each of them with their unique, quirky and often highly counter-intuitive menus that you'll grow to love. "

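the three concepts in the quote above (samples/instruments, patterns, sequencing order) map fairly directly onto a data structure. A minimal sketch; all class and field names are hypothetical and the default tempo is just a placeholder, not taken from any particular tracker format.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Sample:
    name: str
    data: List[float]  # raw waveform values


@dataclass
class Note:
    sample_index: int  # which sample/instrument to trigger
    pitch: int         # pitch to play it at (units are up to the player)


@dataclass
class Pattern:
    # rows[r][ch] is the note on row r, channel ch, or None for silence
    rows: List[List[Optional[Note]]]


@dataclass
class Song:
    samples: List[Sample]
    patterns: List[Pattern]
    order: List[int]   # sequence of pattern indices to play
    tempo: int = 125   # placeholder default

    def playback_rows(self):
        """Yield rows in playback order by following the order list."""
        for pattern_index in self.order:
            yield from self.patterns[pattern_index].rows
```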
---

https://github.com/anttihirvonen/demoscene-starter-kits

"

Beginners

If you have little to none graphics programming experience, Processing is the best way to get started. It has an intuitive API, good documentation, lots of examples and most importantly, you can get your first visuals on screen in 10 minutes. Also, there's very little boilerplate code you need to write – instead, you can focus on developing content for your awesome first demo! "

" Getting started with Windows and Visual Studio

These kits contain examples on raw WinAPI programming for size limited productions. Code is compiled using Microsoft Visual Studio 2013 and the compiled binaries are compressed with Crinkler to achieve minimal file sizes.

Available kits

Windows1k_OpenGL: Opens an OpenGL window and draws an effect using a GLSL shader

Links

Iñigo Quílez's excellent sizecoding examples

Graphics Size Coding blog

in4k wiki (cached) a somewhat dated but interesting size coding resource

Getting the software

You can download Visual Studio 2013 Express for free

"

---

another demoscene keyword 'procedural' eg 'procedural graphics' 'procedural music'

another keyword, probably more useful for our purposes, is 'sizecoding'

---

is a blog focusing on sizecoding graphics techniques. It has a sidebar of links that i haven't explored yet.

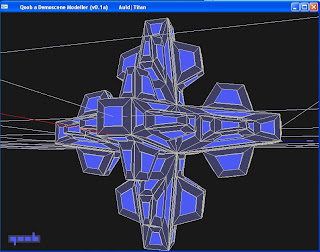

the author makes a graphics library for demos/intros called Qoob. The idea is that, for small CGI objects, storing the list of commands to generate the object is more compact than storing the mesh and compressing it. Qoob can store this in 30 bytes:

older posts eg [14] have some good 'hello world'-ish stuff for starting GPU stuff

---

" The things that excite me the most about Rust are

The borrow checker, which greatly improves memory safety (SEGFAULTs no more!),

Immutability (const) by default,

Intuitive syntactic sugar such as pattern matching,

No built-in implicit conversions between (arithmetic) types."

---

demoscene 4k and 64k ("4k and 64k intros") audio tools:

demoscene <=64k, sizecoding resources:

---

http://xmunkki.org/wiki/doku.php?id=projects:il4

---

2004 Forth JIT Compiler for 4K intros

http://neoscientists.org/~plex/win4k/index.html

---

i dont think i'll ever need this again but noting it just in case:

Floating Point Routines for the 6502 (1976) (6502.org)

pascalmemories on May 9, 2015 [-]

Around 768 bytes. The golden days of tight code and amazing minds pulling together cool code that set the framework for floating point on 'home' computers. A great reference point and piece of history.

edit: I see from the link in the comment posted by ddingus that there is also an errata with a fix for the bug in the original code. http://codebase64.org/doku.php?id=base:errata_for_rankin_s_6502_floating_point_routines

StillBored on May 10, 2015 [-]

Well, $1fee-$1d00=750 bytes of code, + the 14 zero page locations. Its really the zero page that makes the processor function at all. When I go back to 6502 assembly, it amazes me what was possible with a processor that basically had 3 independent (because they could not be combined) 8 bit registers and a 256 byte stack.

There are some C compilers (http://www.6502.org/tools/lang/) for the processor, but it quickly becomes obvious just how poorly C fits. The 6502 is basically a processor that forces one to code with a bytecode + interpreter or in native assembly.

Which brings me back to the zero page. It is is both the solution to getting anything complex done. And simultaneously the problem because there isn't a good way to allocate space there (because the zero page locations are encoded directly in the instructions).

...it precludes easily sharing routines from different projects

jjwiseman on May 9, 2015 [-]

As a reminder, the 6502 doesn't even have integer multiply or divide.

ddingus on May 9, 2015 [-]

Over the years, some pretty good routines have been developed.

http://codebase64.org/doku.php?id=base:6502_6510_maths

Tons of great little tricks in there. 6502 math coding is kind of fun in this way.

A look at old games, such as "Elite" ( http://www.iancgbell.clara.net/elite/) show many trade offs being used to make simple wireframe 3D plausible at some few frames per second.
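
since the 6502 has no multiply instruction, multiplication has to be built from shifts and adds. Below is a generic shift-and-add sketch of the idea, written in Python for readability; it is not a transcription of any routine from the codebase64 page linked above, and `mul8` is a made-up name.

```python
def mul8(a, b):
    """Multiply two unsigned 8-bit values using only shifts and adds.

    For each set bit of b, a correspondingly shifted copy of a is added
    to the result, giving a 16-bit product after eight steps.
    """
    if not (0 <= a <= 0xFF and 0 <= b <= 0xFF):
        raise ValueError("operands must be unsigned 8-bit values")
    result = 0
    for _ in range(8):
        if b & 1:
            result += a
        a <<= 1  # next bit of b is worth twice as much
        b >>= 1
    return result & 0xFFFF
```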

---

"a plugin architecture based on the ideas behind Redux with a very small core (https://repl.it/blog/ide). Everything in the IDE is a plugin, which is simply a reducer, a receiver, and a React component. The reducer builds up the state required for the plugin to work, the receiver dispatches actions in response to other actions flowing through the system, and the component renders" [21]

---

"...tracker music. It’s pretty much like MIDI2 but with also samples packed in the file..."

" I used Windows’ built-in gm.dls MIDI soundbank file (again, a classic trick) to make a song with MilkyTracker in XM module format. This is the format that was used also for many MS-DOS demoscene productions back in the 90s. "

---

comments on:

https://plg.uwaterloo.ca/~cforall/features

zestyping 1 day ago

There are a lot of features thrown into this language that don't seem worth the learning costs they incur. What are the problems you're really trying to fix? Focus on the things that are really important and impactful, and solve them; don't waste time on quirky features that just make the syntax more alien to C programmers.

kragen 1 day ago [-]

Yeah, Ping, I agree. It reads like they missed the key lesson of C — in Dennis Ritchie's words, "A language that doesn't have everything is actually easier to program in than some that do." And some of the things they've added vitiate some of C's key advantages — exceptions complicate the control flow, constructors and destructors introduce the execution of hidden code (which can fail), and even static overloading makes it easy to make errors about what operations will be invoked by expressions like "x + y".

An interesting exercise might be to figure out how to do the Golang feature set, or some useful subset of it, in a C-compatible or mostly-C-compatible syntax.

I do like the returnable tuples, though, and the parametric polymorphism is pretty nice.

dmitrygr 1 day ago [-]

exceptions because in embedded contexts they may not always be a good idea (and C targets such contexts). overloading because it is too easy to abuse and as such it gets abused a lot by those who do not know better. The rest of us are then stuck decoding what the hell "operator +" means when applied to a "serial port driver" object

reza_n 1 day ago [-]

Constructs like closures come at a cost. Function call abstraction and locality means hardware cannot easily prefetch, instruction cache misses, data cache misses, memory copying, basically, a lot of the slowness you see in dynamic languages. The point of C is to map as close to hardware as possible, so unless these constructs are free, better off without them and sticking to what CPUs can actually run at full speed.

alerighi 1 day ago [-]

Clousure costs a lot if we are talking of real closures, that capture variables from the scope where they are defined, because you need to save somewhere that information, so you need to alloc an object with all the complexity associated.

And it can easily get very trick in a language like C where you don't have garbage collection and you have manually memory management, it's easy to capture things in a closure and then deallocate them, imagine if a closure captures a struct or an array that is allocated on the stack of a function for example.

I think we don't need closures in C, the only thing that I think we would need is a form of syntactic for anonymous function, that cannot capture anything of course, it will do most of the things that people uses closure for and doesn't have any performance problems or add complexity to the runtime.

enriquto 1 day ago

I'm all for the evolution of C, but this list...

1) has some downright idiotic things (exceptions, operator overloading)

2) has a few reasonable, but mostly inconsequential things (declaration inside if, case ranges)

3) is missing a few real improvements (closures, although it is not clear whether the "nested routines" can be returned)

(but note another comment which said, "Now if you want to talk about something that actually makes manual memory management a total nightmare, look at the OP's suggestion for adding closures to C.")

bdamm 1 day ago [-]

Agree 100%. Improvements to C would be things like removing "undefined behavior", not adding more syntax sugar. If anything, C's grammar is already too bloated. (I'm looking at you, function pointer type declarations inside anonymized unions inside parameter definition lists.)

dmitrygr 1 day ago [-]

Couldn't agree more. and i'll add another:

the suggested syntax is ridiculous. What is this punctuation soup?

void ?{}( S & s, int asize ) with( s ) { // constructor operator

void ^?{}( S & s ) with( s ) { // destructor operator

^x{}; ^y{}; // explicit calls to de-initialize

ori_b 1 day ago [-]

Fair point, but changing the language won't change the amount of global state. and the associated complexity, nor will it make subsystem supervision work correctly. Changing the language will not prevent unbounded recursion or the associated stack overflows and subsystem failures. Changing the language will not fix mis-analysis of task switching overhead. And changing the language will not fix manufacturing issues with the PCBs.

As far as I'm aware, one of the very few toolchains that even try to improve on this over C are Ada/SPARK.

vvanders 1 day ago [-]

> but changing the language won't change the amount of global state

Global mutable state is marked as Unsafe in Rust.

> nor will it make subsystem supervision work correctly

Erlang is built specifically around this concept.

Perfect is the enemy of good here, throwing out a whole language due to one case doesn't help anyone.

ori_b 1 day ago [-]

> Global mutable state is marked as Unsafe in Rust.

It's also the simplest way to avoid dynamic allocation and the associated OOM issues. So, short of doing static analysis to bound heap usage at compile time, that makes things worse.

And Toyota already got the static analysis wrong for their stack usage. At least globals will fail to compile if they won't fit.

pjmlp 1 day ago [-]

Even ANSI/ISO C working group acknowledges that “thrust the programmer” doesn’t quite work.

gmueckl 1 day ago [-]

This is because trusting the programmer is fundamentally wrong, no matter the programming language. In any good development process the actual coding is the least amount of work - for a reason.

pjmlp 1 day ago [-]

This was the statement I was referring to.

<quote>

Spirit of C:

a. Trust the programmer.

b. Do not prevent the programmer from doing what needs to be done.

c. Keep the language small and simple.

d. Provide only one way to do an operation.

e. Make it fast, even if it is not guaranteed to be portable.

The C programming language serves a variety of markets including safety-critical systems and secure systems.

While advantageous for system level programming, facets (a) and (b) can be problematic for safety and security.

Consequently, the C11 revision added a new facet:

</quote>

http://www.open-std.org/jtc1/sc22/wg14/www/docs/n2139.pdf

pjc50 1 day ago [-]

The trouble with a "safer C variant" is that it must remove features, or at least more heavily constrain programs to a safer subset of the language. This makes it not backwards-compatible.

I think the only successful "subset of C" is MISRA.

pjmlp 1 day ago [-]

Maybe Frama-C as well.

fao_ 1 day ago [-]

I remember reading a paper from around 2007 that asserted that most of MISRA did not catch or significantly prevent major bugs in code, indeed it asserted that much of the standard was useless. I am failing to find it now, as I cannot remember what terms I used, and I am not at a library computer and therefore I cannot search behind paywalls beyond abstracts.

zzzcpan 1 day ago [-]

Cannot say that this is unexpected, but I was interested to find some papers, presumably these two: Assessing the Value of Coding Standards: An Empirical Study [1], Language subsetting in an industrial context: A comparison of misra c 1998 and misra c 2004 [2]

[1] http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.559.7854&rep=rep1&type=pdf

[2] http://leshatton.org/Documents/MISRA_comp_1105.pdf

duneroadrunner 1 day ago [-]

SaferCPlusPlus[1], for example, is a safe subset of C++ that has compatible safe substitutes for C++'s (and therefore C's) unsafe elements. So migrating existing C/C++ code generally just requires replacing variable declarations, not restructuring the code.

For C programs, one strategy is to provide a set of macros to be used as replacements for unsafe types in variable declarations. These macros will allow you, with a compile-time directive, to switch between using the original unsafe C elements, or the compatible safe substitutes (which are C++ and require a C++ compiler).

The replacement of unsafe C types with the compatible substitute macros can be largely automated, and there is actually a nascent auto-translator[2] in the works. (Well, it's being a bit neglected at the moment :)

Custom conventions using macros to improve code quality are not that uncommon in organized C projects. Right? But this one can (optionally, theoretically) deliver complete memory safety. So you might imagine, for example, a linux distribution providing two build versions, where one is a little slower but memory safe.

[1] shameless plug: https://github.com/duneroadrunner/SaferCPlusPlus

[2] https://github.com/duneroadrunner/SaferCPlusPlus-AutoTranslation

kerkeslager 1 day ago [-]

What makes you think that a safer C variant would win the hearts of UNIX kernels and embedded devs any more than C++ (which started as just a C variant).

BoorishBears 1 day ago [-]

Simplicity, no STL or templating, no OOP connotations

I don’t necessarily think it would, but if it did, those would all be reasons

BruceIV