http://stackoverflow.com/questions/7823725/what-kind-of-garbage-collection-does-go-use

---

" All about garbage collectors

You see, the CPU problem, and all the CPU-bound benchmarks, and all the CPU-bound design decisions–that’s really only half the story. The other half is memory. And it turns out, the memory problem is so vast, that the whole CPU question is just the tip of the iceberg. In fact, arguably, that entire CPU discussion is a red herring. What you are about to read should change the whole way you think about mobile software development.

In 2012, Apple did a curious thing (well, unless you are John Gruber and saw it coming). They pulled garbage collection out of OSX. Seriously, go read the programming guide. It has a big fat “(Not Recommended)” right in the title. If you come from Ruby, or Python, or JavaScript, or Java, or C#, or really any language since the 1990s, this should strike you as really odd. But it probably doesn’t affect you, because you probably don’t write ObjC for Mac, so meh, click the next link on HN. But still, it seems strange. After all, GC has been around, it’s been proven. Why in the world would you deprecate it? Here’s what Apple had to say:

We feel so strongly about ARC being the right approach to memory management that we have decided to deprecate Garbage Collection in OSX. - Session 101, Platforms Kickoff, 2012, ~01:13:50

The part that the transcript doesn’t tell you is that the audience broke out into applause upon hearing this statement. Okay, now this is really freaking weird. You mean to tell me that there’s a room full of developers applauding the return to the pre-garbage collection chaos? Just imagine the pin drop if Matz announced the deprecation of GC at RubyConf. And these guys are happy about it? Weirdos.

Rather than write off the Apple fanboys as a cult, this very odd reaction should clue you in that there is more going on here than meets the eye. And this “more going on” bit is the subject of our next line of inquiry.

So the thought process goes like this: Pulling a working garbage collector out of a language is totally crazy, amirite? One simple explanation is that perhaps ARC is just a special Apple marketing term for a fancypants kind of garbage collector, and so what these developers are, in fact applauding–is an upgrade rather than a downgrade. In fact, this is a belief that a lot of iOS noobs have.

ARC is not a garbage collector

So to all the people who think ARC is some kind of garbage collector, I just want to beat your face in with the following Apple slide:

This has nothing to do with the similarly-named garbage collection algorithm. It isn’t GC, it isn’t anything like GC, it performs nothing like GC, it does not have the power of GC, it does not break retain cycles, it does not sweep anything, it does not scan anything. Period, end of story, not garbage collection.
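Aside (not from the quoted post): the "does not break retain cycles" point can be seen in any reference-counted runtime. The sketch below uses CPython rather than Objective-C, on the assumption that CPython's refcounting plus its optional cycle collector is a fair stand-in for the distinction being drawn. `Node`, `make_cycle`, `make_acyclic`, and `demo` are hypothetical names made up for illustration.

```python
import gc
import weakref


class Node:
    """A tiny object that can hold a strong reference to another Node."""

    def __init__(self, name):
        self.name = name
        self.other = None


def make_acyclic():
    # a -> b, nothing points back: plain refcounting can free both.
    a = Node("a")
    b = Node("b")
    a.other = b
    return weakref.ref(a)  # weak reference lets us observe a's lifetime


def make_cycle():
    # a -> b -> a: each object keeps the other's refcount above zero.
    a = Node("a")
    b = Node("b")
    a.other = b
    b.other = a
    return weakref.ref(a)


def demo():
    gc.disable()  # turn off the automatic tracing collector; refcounting remains
    try:
        acyclic = make_acyclic()
        cyclic = make_cycle()
        freed_acyclic = acyclic() is None       # freed by refcounting alone
        freed_cyclic = cyclic() is None         # still alive: the cycle leaked
        gc.collect()                            # explicit tracing pass
        freed_after_trace = cyclic() is None    # the tracing pass finds the cycle
    finally:
        gc.enable()
    return freed_acyclic, freed_cyclic, freed_after_trace


if __name__ == "__main__":
    print(demo())
```

On CPython the acyclic pair is released as soon as the function returns, the cyclic pair survives until a tracing pass runs, and under pure reference counting with no tracing pass it would never be released. In ARC the usual answer is to make one of the two links weak.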

The myth somehow grew legs when a lot of the documentation was under NDA (but the spec was available, so that’s no excuse) and as a result the blogosphere has widely reported it to be true. It’s not. Just stop.

GC is not as feasible as your experience leads you to believe

So here’s what Apple has to say about ARC vs GC, when pressed:

At the top of your wishlist of things we could do for you is bringing garbage collection to iOS. And that is exactly what we are not going to do… Unfortunately garbage collection has a suboptimal impact on performance. Garbage can build up in your applications and increase the high water mark of your memory usage. And the collector tends to kick in at undeterministic times which can lead to very high CPU usage and stutters in the user experience. And that’s why GC has not been acceptable to us on our mobile platforms. In comparison, manual memory management with retain/release is harder to learn, and quite frankly it’s a bit of a pain in the ass. But it produces better and more predictable performance, and that’s why we have chosen it as the basis of our memory management strategy. Because out there in the real world, high performance and stutter-free user experiences are what matters to our users. ~Session 300, Developer Tools Kickoff, 2011, 00:47:49

But that’s totally crazy, amirite? Just for starters:

It probably flies in the face of your entire career of experiencing the performance impact of GCed languages on the desktop and server

Windows Mobile, Android, MonoTouch, and the whole rest of them seem to be getting along fine with GC

So let’s take them in turn.

GC on mobile is not the same animal as GC on the desktop

I know what you’re thinking. You’ve been a Python developer for N years. It’s 2013. Garbage collection is a totally solved problem.

Here is the paper you were looking for. Turns out it’s not so solved:

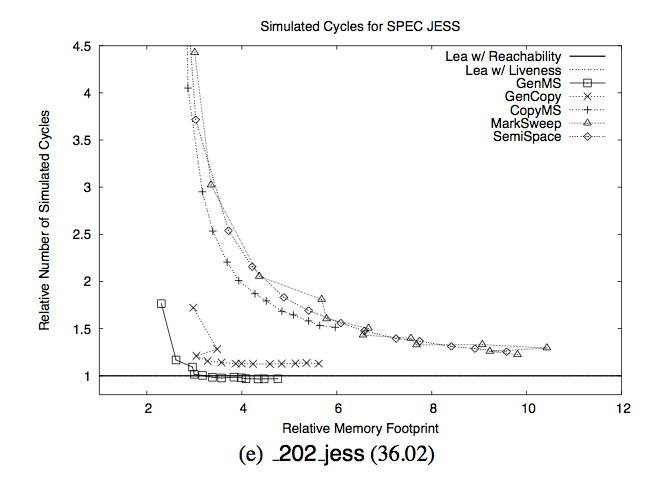

If you remember nothing else from this blog post, remember this chart. The Y axis is time spent collecting garbage. The X axis is “relative memory footprint”. Relative to what? Relative to the minimum amount of memory required.

What this chart says is “As long as you have about 6 times as much memory as you really need, you’re fine. But woe betide you if you have less than 4x the required memory.” But don’t take my word for it:

In particular, when garbage collection has five times as much memory as required, its runtime performance matches or slightly exceeds that of explicit memory management. However, garbage collection’s performance degrades substantially when it must use smaller heaps. With three times as much memory, it runs 17% slower on average, and with twice as much memory, it runs 70% slower. Garbage collection also is more susceptible to paging when physical memory is scarce. In such conditions, all of the garbage collectors we examine here suffer order-of-magnitude performance penalties relative to explicit memory management.
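Aside (not from the quoted post): a rough way to place an app on that chart is to divide the memory it can actually get by the minimum it needs, then look up the nearest figure quoted above. This is only a sketch. The table holds just the three averages from the quote, treats "matches or slightly exceeds" as 1.00, and does no interpolation. `QUOTED_POINTS`, `relative_footprint`, and `quoted_slowdown` are hypothetical names, and the 300 MB / 100 MB inputs are made-up numbers.

```python
# (heap size as a multiple of the minimum required,
#  runtime relative to explicit memory management), from the quoted averages.
# Sorted from largest multiple to smallest.
QUOTED_POINTS = [
    (5.0, 1.00),  # "matches or slightly exceeds" explicit management
    (3.0, 1.17),  # "17% slower on average"
    (2.0, 1.70),  # "70% slower"
]


def relative_footprint(available_mb, required_mb):
    """X axis of the chart: available memory divided by the minimum required."""
    if required_mb <= 0:
        raise ValueError("required_mb must be positive")
    return available_mb / required_mb


def quoted_slowdown(footprint):
    """Return the quoted slowdown for the largest quoted multiple that the
    footprint reaches, or None below 2x, where the quote gives no average."""
    for multiple, slowdown in QUOTED_POINTS:
        if footprint >= multiple:
            return slowdown
    return None


if __name__ == "__main__":
    # Hypothetical app: needs 100 MB live, can get 300 MB before being killed.
    ratio = relative_footprint(300, 100)
    print(ratio, quoted_slowdown(ratio))
```

Because the lookup rounds down to the nearest quoted multiple, a 4x footprint reports the 3x figure, which should be read as a pessimistic bound and not as a measurement.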

Now let’s compare with explicit memory management strategies:

These graphs show that, for reasonable ranges of available memory (but not enough to hold the entire application), both explicit memory managers substantially outperform all of the garbage collectors. For instance, pseudoJBB running with 63MB of available memory and the Lea allocator completes in 25 seconds. With the same amount of available memory and using GenMS, it takes more than ten times longer to complete (255 seconds). We see similar trends across the benchmark suite. The most pronounced case is 213 javac: at 36MB with the Lea allocator, total execution time is 14 seconds, while with GenMS, total execution time is 211 seconds, over a 15-fold increase.

The ground truth is that in a memory constrained environment garbage collection performance degrades exponentially. If you write Python or Ruby or JS that runs on desktop computers, it’s possible that your entire experience is in the right hand of the chart, and you can go your whole life without ever experiencing a slow garbage collector. Spend some time on the left side of the chart and see what the rest of us deal with. "

-- http://sealedabstract.com/rants/why-mobile-web-apps-are-slow/

--

" I’m a contributor to the Rust project, whose goal is zero-overhead memory safety. We support GC’d objects via “@-boxes” (the type declaration is “@T” for any type T), and one thing we have been struggling with recently is that GC touches everything in a language. If you want to support GC but not require it, you need to very carefully design your language to support zero-overhead non-GC’d pointers. It’s a very non-trivial problem, and I don’t think it can be solved by forking JS.

" -- http://sealedabstract.com/rants/why-mobile-web-apps-are-slow/

--

alecbenzer 1 day ago

What exactly is the argument for garbage collection over RAII-style freeing? Even in garbage-collected languages, you still need to do things like close file objects; the runtime doesn't do so automatically when it detects that there are no stack references to the file. Same goes for unlocking mutexes, or any of the other things that RAII tends to address.

Is it just that maintaining ownership semantics for heap memory tends to be more complicated than doing the same for file objects (much more rarely shared, I'd guess) or mutexes (not sure if "sharing" is even sensibly defined here)?
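Aside: the point about files and mutexes is why garbage-collected languages tend to grow a scoped-release construct next to the collector. A minimal Python sketch of that idea follows, using a context manager as the RAII-like mechanism. `TrackedResource` and `use_with_scope` are hypothetical names, and the resource is a stand-in that only records events, not a real file or lock.

```python
class TrackedResource:
    """Stand-in for a file or lock: records when it is opened and closed."""

    def __init__(self, log):
        self.log = log
        self.closed = False
        log.append("open")

    def close(self):
        # Idempotent so a double close is harmless.
        if not self.closed:
            self.closed = True
            self.log.append("close")

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc, tb):
        self.close()   # runs when the block exits, normally or via exception
        return False   # do not swallow the exception


def use_with_scope(log):
    try:
        with TrackedResource(log):
            log.append("work")
            raise RuntimeError("boom")  # simulate a failure mid-use
    except RuntimeError:
        log.append("handled")
    return log


if __name__ == "__main__":
    print(use_with_scope([]))
```

The release happens at a known point, before the exception handler runs, regardless of when (or whether) the collector later reclaims the object's memory.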

rbehrends 1 day ago

There are a number of reasons why you may want garbage collection/automatic memory management over RAII:

---

http://pivotallabs.com/why-not-to-use-arc/

"

I was pretty happy when we switched from refcounting to a tracing GC; the refcounting was already somewhat optimized (didn't emit any obviously-duplicate increfs/decrefs), but removing the refcounting operations opened up a number of other optimizations. As a simple example, when refcounting you can't typically do tail call elimination because you have to do some decrefs() at the end of the function, and those decrefs will also typically keep the variables live even if they didn't otherwise need to be. It was also very hard to tell that certain operations were no-ops, since even if something is a no-op at the Python level, it can still do a bunch of refcounting. You can (and we almost did) write an optimizer to try to match up all the incref's and decref's, but then you have to start proving that certain variables remain the same after a function call, etc.... I'm sure it's possible, but using a GC instead made all of these optimizations much more natural.

" -- https://mail.python.org/pipermail/python-dev/2014-April/133788.html
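Aside: the remark that pending decrefs "keep the variables live even if they didn't otherwise need to be" can be observed from inside CPython, which is used here only as a convenient reference-counted runtime and is not necessarily the implementation the quote describes. In this sketch `Payload`, `callee`, `caller_holds_local`, and `caller_drops_local` are hypothetical names.

```python
import weakref


class Payload:
    """Placeholder object whose lifetime we want to observe."""


def callee(probe):
    # probe is a weak reference to an object created by the caller.
    # Report whether that object is still alive while we are running.
    return probe() is not None


def caller_holds_local():
    data = Payload()
    probe = weakref.ref(data)
    # 'data' is never used again, but the frame still owns a reference to it
    # and only releases it after callee() has returned. That pending release
    # is the work a tail call would have to skip.
    return callee(probe)


def caller_drops_local():
    data = Payload()
    probe = weakref.ref(data)
    del data  # release the reference by hand before the call
    return callee(probe)


if __name__ == "__main__":
    print(caller_holds_local(), caller_drops_local())
```

On CPython the first caller reports the object as still alive during the call and the second reports it as gone. A tracing collector has no per-frame release step to run after the call, which is one reason the optimizations mentioned in the quote become easier.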

---